It started with a Google search, how did it end up like this?

Students can be the greatest teachers. I love learning new ideas from them. Sometimes, though, what they share with me worries me. Teaching Civics gives me the opportunity to talk about the news and current events with my students on a nearly daily basis. In those conversations, I get to find out what they listen to or read to stay informed. After exploring the topic of algorithms this week, I started questioning my own understanding of how we get our information, especially those of us who are often on social media sites, myself included.

Image Source: ABC News

I listened to an episode of the podcast Factually! with Adam Conover on the biases of search engines like Google, in which he interviewed UCLA Professor Safiya Noble. In the episode, Noble mentions that Google became "the people's public library." Anyone and everyone can access it at any given time, and for most, it has become the go-to site for finding information. "Just Google it," we say, putting complete trust in what Google will present back to us, sometimes clicking on the first or second hit. I, too, do this. Why would a site with so much information try to give me misinformation?

However, Google and social media sites are heavily dominated by advertisements and can spread dangerous misinformation that has real-world, and sometimes violent, consequences. It's not just that the sites figure out what users respond to through clicks, shares, and likes; racism and sexism have been amplified and vigorously shared, slipping through supposed "filters." The problem goes beyond the numbers of an algorithm that keeps suggesting content based on what we view, and beyond those patterns. Conover mentions in the podcast episode that online platforms are almost like "a giant human system that sounds a lot like the rest of human society where racism, sexism and other forms of discrimination will of course exist." Of course algorithms reflect this. They reflect us: the parts of society we blissfully ignore for the sake of profit, power, and comfort.

I am deeply concerned about what my students, and young people overall, are taking in as part of their online behavior. I know they scroll aimlessly at times, without ever questioning what they are reading and viewing. As their Civics teacher, I do my best to dispel harmful information and challenge their viewpoints. I don't shut them out of the conversation; I welcome them to have it with me. Just today, during a lesson on the types of members of society we have in our communities, a student put in the chat, "everyone let's start a protest, whoops I mean a riot." I know this student is repeating verbatim what they have seen online, thanks to what spreads there and what is recommended to them. This is a problem not only for their age group: my students are also members of a marginalized community and of a target demographic where racist dog whistles and information taken out of context can be tolerated or accepted without much thought.


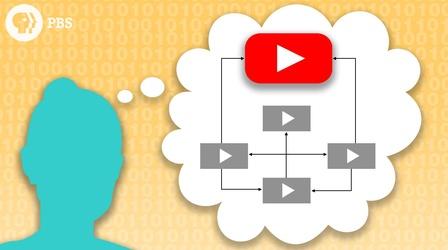

Image Source: Above the Noise | PBS.org

I don't have the right answers to any of this, but I know what I can do. My classroom can become a brave space, one where we challenge not only ourselves but also the information we take in. Before the debates we hold in our classroom, I usually show a video on the topic provided by Above the Noise. In their video How to Avoid the Rabbit Hole of YouTube, Professor Noble reappears to suggest better ways to engage while searching for information online. I plan to show the video to my students in a media literacy lesson. Its tips are great for students as they learn to navigate the world online, and for all of us:

- "Be careful with your search words."

- "Watch out for odd or unrelated search results."

- "Get off YouTube and find other sources of information, and not just Google, either."

Brenda, this is a fantastic post. You are so lucky to be in such an important role with students, where this topic doesn't come off as an adult trying to tell them to behave. I would love to figure out a way to teach kids how algorithms work in a hands-on way. Right now the only thing I can think of is to come up with a topic and have each student write one sentence either for or against it. You, as the teacher, read their comments and sort them into all positive and all negative, and then hand-pick two students. Give one all the positive comments and the other all the negative comments. This is a way to "act" as an algorithm, where YOU (aka the algorithm) decide what news they see, which might not reflect what their friends see.
